Nvidia’s ever-optimistic CEO, Jensen Huang, has revealed more details of the company’s next-gen AI platform at the same GTC event where the company’s quantum computing plans were outlined. He calls it the Vera Rubin “superchip” and claims it contains no fewer than six trillion transistors. Yup, six trillion.

In very simple terms, that means the Vera Rubin superchip sports 60 times the number of transistors of an Nvidia RTX 5090 gaming GPU. And that is a completely bananas comparison.

TSMC’s N3 process is denser than the N5-class node behind the RTX 5090, for sure. But nowhere near enough to go from about 100 billion to six trillion transistors. As it turns out, Vera Rubin isn’t just more than one chip, it’s more than one chip package.

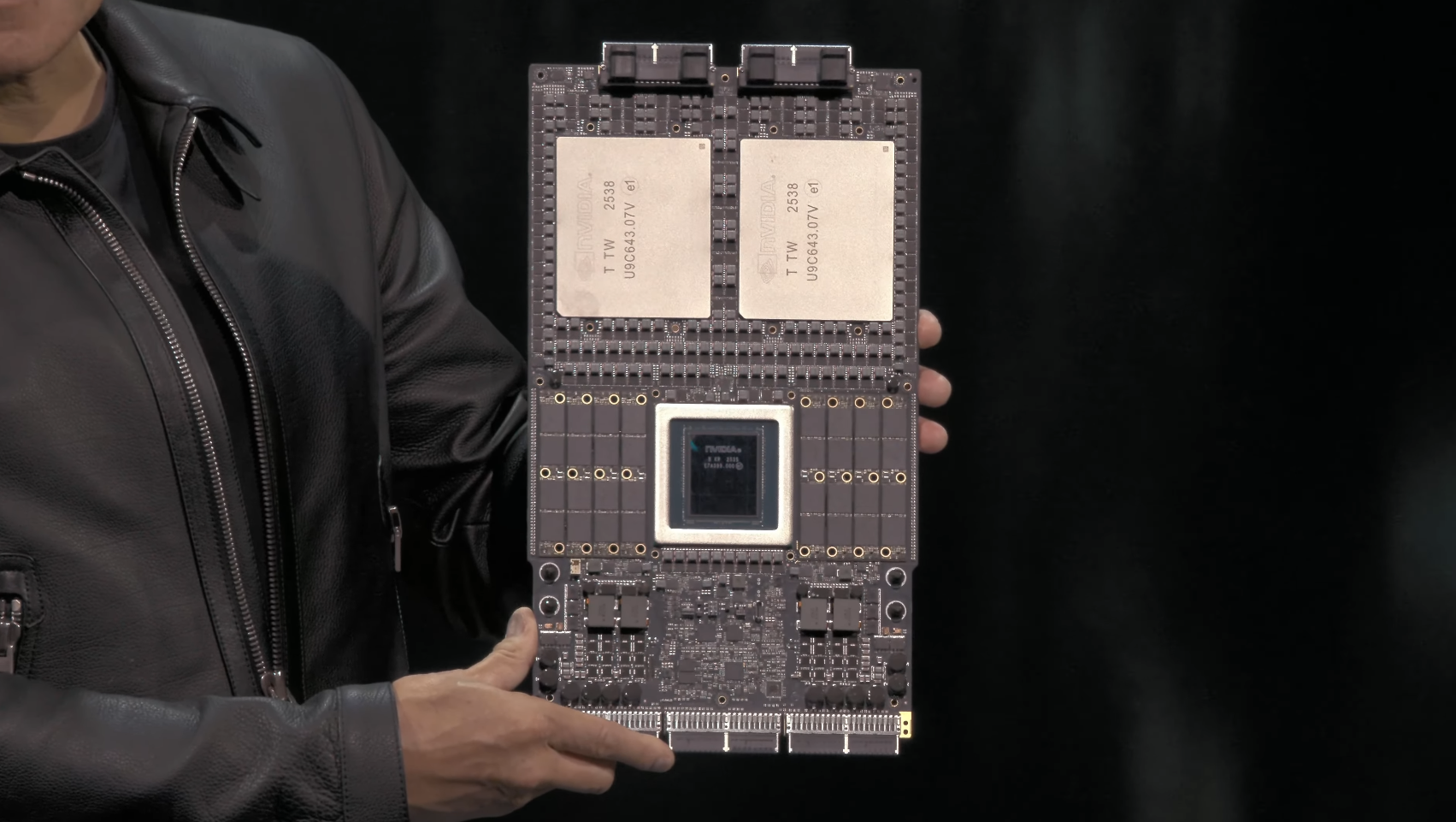

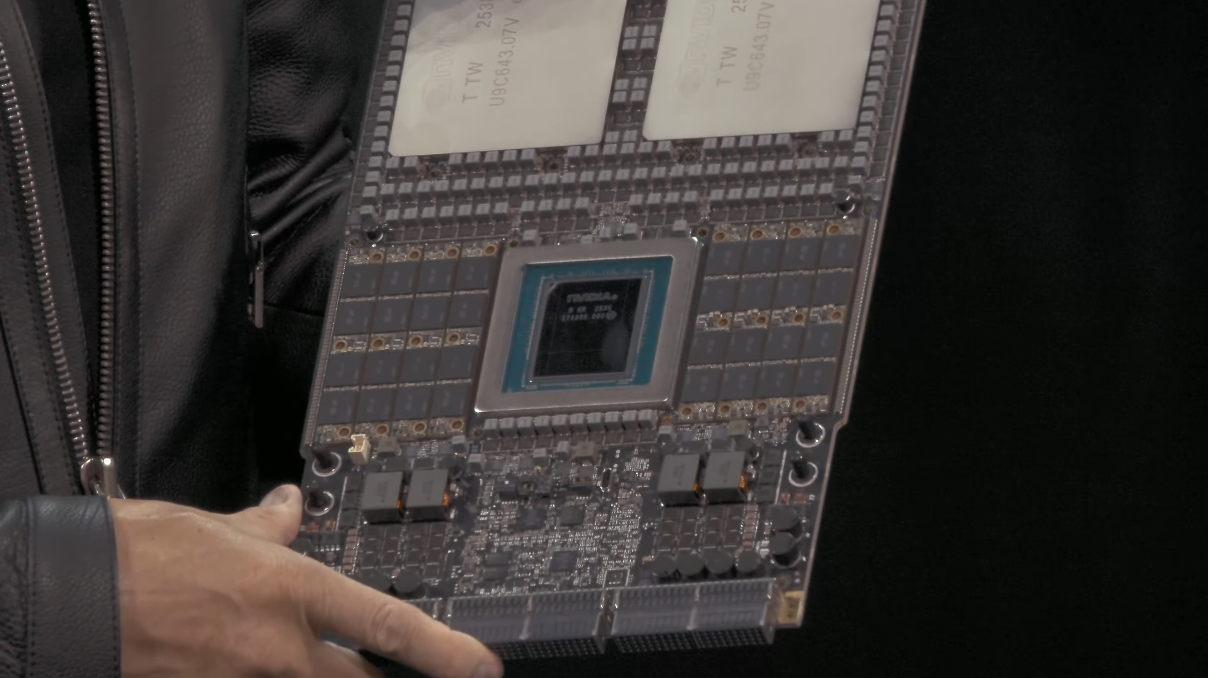

Huang held up an engineering sample of Vera Rubin, and the three main chip packages are clear to see: one for the CPU and two for the GPUs. Later in the presentation, it becomes clear that each GPU package actually contains two GPUs. So that’s four GPUs total, plus the CPU. A close-up of the Vera CPU package also reveals it to be a chiplet design, further raising the overall transistor count headroom.

That said, each Rubin AI GPU die is monolithic and described as reticle-sized, which means it’s as big as TSMC’s production process will allow. But even if you were generous and assumed that TSMC could squeeze 200 billion transistors into a single monolithic chip using N3, you’d need 30 such chips to get to that six trillion figure.
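For anyone who wants to check the back-of-envelope maths, here is a quick Python sketch. Every figure in it is one of the round numbers used in this article (the roughly 100 billion transistors for the RTX 5090, the generous 200 billion per N3 die, the four GPU dies Huang showed), not an official Nvidia spec, and the variable names are purely illustrative.

```python
# Back-of-envelope check of the six trillion transistor claim.
# All inputs are the article's round-number assumptions, not Nvidia specs.

CLAIMED_TOTAL = 6_000_000_000_000    # Huang's claimed count for Vera Rubin
RTX_5090_APPROX = 100_000_000_000    # "about 100 billion" for the RTX 5090
GENEROUS_PER_DIE = 200_000_000_000   # generous guess for one reticle-sized N3 die
GPU_DIES_SHOWN = 4                   # two GPU packages, two dies each

# How many RTX 5090s' worth of transistors is the claim?
ratio_vs_5090 = CLAIMED_TOTAL // RTX_5090_APPROX

# How many generous 200 billion transistor dies would it take?
dies_needed = CLAIMED_TOTAL // GENEROUS_PER_DIE

# What the four visible GPU dies could plausibly contribute, and what is left over.
gpu_die_total = GPU_DIES_SHOWN * GENEROUS_PER_DIE
unexplained = CLAIMED_TOTAL - gpu_die_total
gpu_share = gpu_die_total / CLAIMED_TOTAL

print(f"Claim vs RTX 5090: {ratio_vs_5090}x")
print(f"Dies needed at 200 billion each: {dies_needed}")
print(f"Four GPU dies cover about {gpu_share:.0%} of the claim")
print(f"Left to explain: {unexplained / 1e12:.1f} trillion transistors")
```

On those assumptions, the four GPU dies account for well under a fifth of the headline number, so the bulk of the count has to come from somewhere other than the GPU logic itself.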

Nvidia also revealed a “Rubin Ultra” GPU package with four Rubin GPU dies, so this stuff is just going to scale and scale. Still, it’s unclear how Nvidia is arriving at its transistor count. I’d have to assume that everything is thrown in, including all the memory for the GPUs and CPU, plus the supporting chips on the Vera Rubin motherboard.

However Nvidia is coming up with the numbers, the Vera Rubin AI board (as opposed to “chip,” as Huang is calling it) is an unfathomably complex thing. Indeed, Huang says it’s 100 times faster than Nvidia’s equivalent product from nine years ago, the DGX-1, which Huang says he delivered to what must then have been a nascent OpenAI.

The rest, as they say, is history. And trillions and trillions of transistors. And trillions of dollars for Nvidia. For the record, Huang says Vera Rubin goes into production “this time next year, maybe even slightly earlier” and it probably won’t be cheap. So get saving up.
